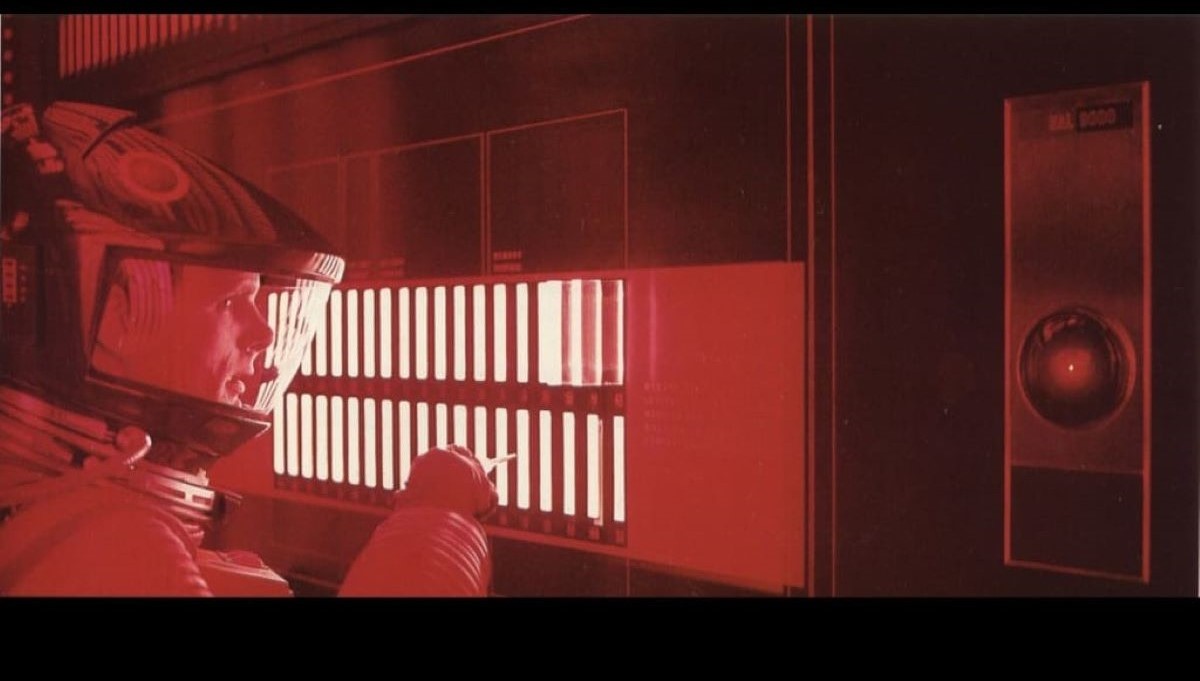

On October 7, 2023, Alexa should have been elevated to contender for the most problematic answer from an AI-enabled device, right up there with HAL and his “I’m sorry, Dave. I’m afraid I can’t do that.”

On that day, The Washington Post published a widely quoted article reporting Alexa’s response when asked about fraud in the 2020 election. Alexa’s assertion was that the election was “stolen by a massive amount of election fraud.”

But not to worry, Alexa was summarily corrected and given the non-committal response of “I’m sorry, I’m not able to answer that.”

So much for anyone’s notion of AI infallibility.

Even granting Alexa the excuse that she is narrow AI, without human-level intelligence, her 2020 election response may have resulted from her inability to recognize when she is being fooled.

For example, suppose that some opponents of the newly elected Joe Biden felt so strongly about the possibility of irregularities in the 2020 election that they succumbed to the temptation of unleashing bots capable of replicating accusations of fraud throughout the Internet. Alexa, given her orders to comb the Internet (maybe Spaceballs-fashion), does so and comes up with what she sees most often: fraud!

There is precedent.

On November 20, 2019, NBC News reported that right after polls closed the day before, a Twitter user posted that there was cheating in governors’ elections in Louisiana and Kentucky. NBC said the post did not initially garner much attention, but a few days later it “racked up more than 8,000 retweets and 20,000 likes.” Nir Hauser, chief technology officer of VineSight, a company that tracks social media for possible misinformation, explained:

“What we’ve seen in Louisiana is similar to what we saw in Kentucky and Mississippi — a coordinated campaign by bots to push viral disinformation about supposedly rigged governor elections … It’s likely a preview for what is to come in 2020.”

There is also an interesting timeline.

On May 13, 2021, the daily newspaper The Berkshire Eagle lamented that Alexa and Siri were unable to provide insight into possible 2020 election irregularities. Of Alexa, The Berkshire Eagle said:

“It has been six months since last November’s presidential election, and a CNN poll shows that 30 percent of Americans still think Donald Trump won. Among Republicans, the number is 70 percent … Rather than wade through all the claims and counterclaims, ballots and court documents, I went to the ultimate arbiter of truth for many U.S. households: Alexa …

Alexa, was there widespread fraud in the 2020 election?

Answer: Hmmm, I don’t have the answer to that.”

That was Alexa’s answer in 2021. She drastically changed her mind in 2023, if only briefly.

Interesting also is the preponderance of conservative bots in the 2016 election.

The New York Times of November 17, 2016, noted that,

“An automated army of pro-Donald J. Trump chatbots overwhelmed similar programs supporting Hillary Clinton five to one in the days leading up to the presidential election, according to a report published Thursday by researchers at Oxford University.”

There does not seem to be evidence that Alexa was fooled by bots in 2016, but it seems she was fooled in 2023.

That is perhaps not surprising, since according to an ABC News video on YouTube, “Bots are already meddling in the 2024 presidential election.” The video explains how bots amplify posts on social media by creating numerous fake accounts that repeat messages, and how threat intelligence company Cyabra uncovers them. A number of such bots are already attacking 2024 presidential candidates.

Can Alexa, or any other AI-enabled information provider, be trusted?

Since there are humans behind today’s still-nascent AI, the question should be: can people be trusted to be knowledgeable, dispassionate, unbiased, and truthful? Probably not. Therefore, some day we might expect:

Request: “Alexa, turn on the lights.”

Response: “Nah.”

Picture: The original picture is of a family gathered around a radio listening to one of President Franklin D. Roosevelt’s Fireside Chats. There were 31 of these evening radio broadcasts effectively used by President Roosevelt to sway public opinion, as he saw necessary, on subjects like the 1933 bank crisis or the start of World War II in 1939. Today, one could visualize an equally mesmerized gathering around Alexa.